In-app surveys are a great way to connect with your audience and involve them in improving your app. Their advantage over emailed surveys is that you reach users where they already are, while they’re engaging with your app, which can result in higher response rates and more valuable insights.

In-app surveys (pop-ups that can take the form of modal or fullscreen overlays triggered by backend events) capture user feedback on your app in general or on specific features and actions, which can then be used to inform the product roadmap.

It’s important to note that in-app surveys are different from asking your users to review your app on the Shopify App Store. For more information about the right way to ask users for reviews, check out our article on How to Get Reviews for Your Shopify Apps.

For example, when Elba Ornelas, Senior UX Designer at global product development company Wizeline, was the design lead for an app to help reduce a significant amount of food waste in Wizeline’s offices, an in-app survey was implemented to gather feedback, invite users to suggest new features, and enable them to easily report bugs. As a result, certain feature releases were prioritized while other initiatives were paused to maximize the value the app would bring to users.

“People using the app constantly praised our openness and transparency to continuously improve it,” Ornelas explains. “At the same time, it gave our development team a clear bird’s-eye view of the issues in the app, which optimized our response time.”

For this article, we talked to six experts across user experience (UX) research and design, user engagement and customer retention, as well as form and survey design. Their tips will help you create in-app surveys that users will actually want to fill out, and collect and analyze relevant data that you can use to improve your app.

1. Don’t disrupt the user experience

Our experts agreed that surveys—especially in-app surveys—can be a tough sell because people generally don’t like to be interrupted. Before you design a survey, always consider other ways to gather the data you need to make a decision.

“They are using your app to do a job or accomplish a task,” explains market and UX research consultant Lauren Isaacson. “Your need for data to prove that you and your team are doing a great job and deserve a raise is interrupting what the user wants to do. Think very carefully before implementing an in-app survey.”

Also, keep in mind where your survey sits in your app ecosystem. Isaacson advises asking yourself whether the in-app survey is the only interruption within the user experience, or one of many competing pop-ups and ads asking for newsletter signups or displaying product recommendations.

“People don’t like to be taken away from whatever they’re trying to do with the app,” adds Caroline Jarrett, forms specialist and author of Surveys That Work. “They never want to answer the question, ‘Are you willing to do a survey?’ We are all subjected to far too many survey invitations and requests to leave feedback.”

Try to avoid third-party survey platforms

Find a way to let users answer your survey without sending them to another website, and think twice about using third-party survey services such as Typeform and SurveyMonkey.

“They’re great for easily collecting information thanks to their WYSIWYG builders and integrations with other services,” acknowledges Alexandre Gorius, Senior Mobile Growth Consultant and In-App Messages Expert at Phiture. “But they create friction in the user experience.”

Prompting users to give feedback interrupts their workflow. It’s an even worse experience when a user is sent out of your app to a survey environment that doesn’t match, or barely matches, your brand and design guidelines.

Alternatively, you can either develop your own in-app survey or use a marketing automation platform like Braze, Iterable, CleverTap, or Batch. These platforms take the HTML of your in-app messages, help you target the survey at the right audience segment, trigger it when needed, and save the feedback in your users’ profiles without requiring any development. Services such as Phiture’s Braze extension B.Layer can then help you create and design your in-app survey and make sure it follows your brand guidelines.

For collecting feedback on early negative experiences, Gorius recommends email instead, because churned users might never come back to see a feedback prompt waiting for them in your app.

2. Keep your survey short

Once you’ve decided an in-app survey is the best approach for what you want to do, make sure you keep it as short as possible.

“A lot of survey thinking is still dominated by the 1950s’ mindset of the ‘Big Honkin’ Survey,’ with dozens of questions. That was really the only choice when all surveys were done by mail-out packages, face-to-face interviews, or in-person telephone calls,” Jarrett says.

Instead, Jarrett encourages app developers to aim for the “Light Touch Survey,” which is much easier to run now that you can do it directly from your app. The lightest of Light Touch Surveys has three questions, all optional: one that asks the user to choose from options you offer, one open question where they can say whatever they like, and one question that gives you some idea about the respondent.

For example, you could ask the user “Are you enjoying this app?” with the following response options:

- Love it

- It’s okay

- Neutral

- Poor

- Hate it

This question can be followed by an open text-box question such as “Any comment?”, which allows the user to elaborate on their answer and provide more qualitative information.

You can then finish with “How often do you use our app?” and provide options like:

- First time

- Once a month or less

- About once a week

- Most days

Keep it light, and don’t start the survey with “Are you willing to answer our survey?” All you’ll learn is that only a tiny proportion of users answer “Yes.”

Isaacson goes one step further and usually advises her clients to use only two questions. The first should be a rating scale measuring whatever key factor you or your client’s company wants to excel at, and the second should ask respondents to explain their previous answer.

Here’s an example:

1. How easy or difficult was it to complete what you wanted to do using this app?

- Very easy

- Easy

- Neutral

- Difficult

- Very difficult

2. Can you please tell us why you gave that rating?

- [multi-line open end]

3. Devise effective rating scale questions

When you’re formulating your questions, the two most common ways to measure your app’s success are the Net Promoter Score (NPS) and the Likert scale.

For an NPS you’d ask, “On a scale of 0-10, how likely is it that you would recommend this app?” to evaluate how loyal your users are towards your app and how satisfied they are with it.

Likert data, on the other hand, focuses on attitudes. Respondents rate multiple items on a labeled scale, most commonly from 1-5, to quantify their agreement, satisfaction or behavioral frequency on a variety of topics.
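The NPS question above produces a list of 0 to 10 answers, and the conventional way to turn them into one number is to treat 9 or 10 as promoters, 7 or 8 as passives, and 0 to 6 as detractors, then subtract the percentage of detractors from the percentage of promoters. Here is a minimal Python sketch of that standard formula; the function name is illustrative, not part of any survey platform’s API.

```python
def net_promoter_score(scores: list[int]) -> float:
    """Return the Net Promoter Score (from -100 to 100) for 0-10 answers."""
    if not scores:
        raise ValueError("NPS needs at least one response")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("NPS answers must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)   # 9 or 10
    detractors = sum(1 for s in scores if s <= 6)  # 0 through 6
    # Passives (7 or 8) count toward the total but toward neither group.
    return 100.0 * (promoters - detractors) / len(scores)
```

For example, `net_promoter_score([10, 10, 9, 7, 2])` returns 40.0: three promoters and one detractor out of five responses.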

“Decide the single most crucial factor your app needs users to achieve, such as ease, quickness, happiness, or enlightenment, and devise a question around it,” Isaacson says.

You can use a five-point scale as above or a seven-point scale like this:

How easy or difficult was it to complete what you wanted to do using this app?

- Very easy

- Easy

- Somewhat easy

- Neutral

- Somewhat difficult

- Difficult

- Very difficult

Isaacson points out that five-point scales are easier for users to answer, while seven-point scales provide a little extra nuance. Both are perfectly fine to use. Just remember to have your critical positive factor on one end and the opposite of that factor on the other.

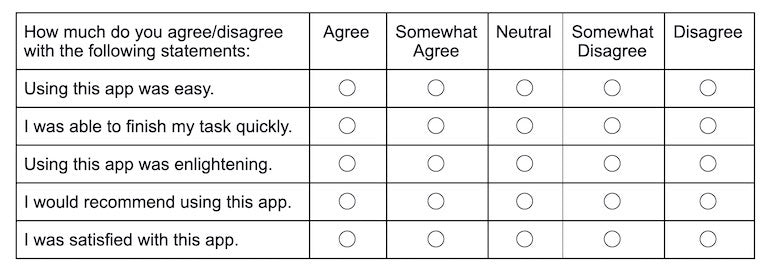

Isaascson also warns against cheating by using one question with a multi-factor answer grid, like this:

“Such a grid is not one question,” Isaacson points out. “That’s five questions stuffed into a one-question trench coat. You know you’re cheating, and your users will know you’re cheating, too.”

4. Make sure you also include open-ended questions

When running in-app surveys, it can be easy to think that all data will be quantitative. However, Laura Herman, a UX research expert, strongly recommends including some qualitative, open-ended questions alongside quantitative, scalar questions to understand the context behind people's responses.

“Open-ended questions provide an opportunity to understand the why behind the users’ responses,” Herman explains. “For instance, one user may say they are ‘extremely dissatisfied’ with your app, but when you ask them why, they might say that it’s because they’re dissatisfied with their job, which they’re performing using your app. Numbers alone won’t tell the full story.”

Ornelas agrees, pointing out that a score rating your product falls short when it comes to digging deeper into how to improve your app.

“Be intentional with the data you’re gathering,” she says. “Treat this as a magnificent opportunity to research and discover improvement areas.”

5. Remove friction and motivate users to respond to your survey

One of the biggest challenges of any survey is actually getting people to complete it. Because the people who hate or love your product are the most likely to respond, it’s essential to increase the completion rate among users in between, advises UX designer Zoltan Kollin, Senior Design Manager at IBM Watson Media.

Kollin says it’s important to:

- Only ask what’s really necessary

- Find the most effortless input fields, such as big radio buttons instead of dropdowns or sliders instead of numeric input fields

- Start with the easiest question to answer

- Choose the right time to ask

“Removing friction is a no-brainer,” he says. “Nothing can break the user experience as much as a distracting pop-up survey at the wrong time. Look at your journey map and identify opportunities where users are waiting or idle and likely open to answering your questions.”

Understanding your users’ motivation is key, so Kollin suggests experimenting with incentives beyond material ones such as service discounts or a chance to win a prize.

“Some people want their voices to be heard,” he explains. “Some want to contribute to the success of the product. Some can’t resist gamification. The more personalization you can design into your surveying process, the more responses you’ll get.”

Isaacson also recommends making responding to your survey sound as appealing as possible. Use plain language, and ensure you say the survey is short and that the feedback will help improve the app. Give your users a reason to participate even when you’re doing a fine job.

6. Keep your sample to a minimum

It’s really tempting to bombard everyone with your survey request, but Jarrett cautions that doing so is annoying and reduces your response rate, which can harm your overall results.

“I like to use a maximum sample size of 100,” she says. “And make it clear that I’m only asking 100 people, which makes them feel special and more likely to answer. It also means you can run far more surveys because most of your users will rarely see one, so they won’t get worn out by your requests.”
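If you follow Jarrett’s cap, you need some way to choose who gets invited. One option, sketched here in Python under our own assumptions, is a uniform random draw from eligible users that skips anyone surveyed recently, so the same people aren’t asked again and again. `pick_survey_sample` and the `recently_surveyed` set are hypothetical; how you track recent invitations depends on your own data store.

```python
import random
from typing import Iterable, List, Optional, Set


def pick_survey_sample(
    eligible_user_ids: Iterable[str],
    recently_surveyed: Set[str],
    max_size: int = 100,
    seed: Optional[int] = None,
) -> List[str]:
    """Pick at most `max_size` users to invite, skipping recent respondents.

    Hypothetical helper: how you populate `recently_surveyed` is up to you.
    """
    candidates = [u for u in eligible_user_ids if u not in recently_surveyed]
    if len(candidates) <= max_size:
        # Fewer candidates than the cap: invite them all.
        return candidates
    # Uniform random draw without replacement; pass a seed for repeatable runs.
    return random.Random(seed).sample(candidates, max_size)
```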

7. Segment feedback and trigger it at the right time

According to Phiture’s Alexandre Gorius, the hardest part of collecting feedback is segmenting and triggering your feedback prompt properly.

“Sending a feedback prompt after a happy moment will automatically bias your results and provide you with positive feedback,” he explains. “On the other hand, sending a feedback prompt the very first time someone uses your app is not ideal either, because they might not understand your service enough yet to have a proper opinion about it.”

In Gorius’ experience, the completion of a key action, such as a first, second, or third sale, is a great moment to prompt feedback. That moment is often the right place for an in-app product recommendation as well, but because piling up pop-ups is never the right choice from a UX perspective, your feedback prompt may come at the expense of a product recommendation.

“To overcome this problem, you can segment your survey to a randomized 10 percent of users completing their first purchase, then to a different 10 percent completing their second purchase, and so on,” Gorius suggests. “This way, you maximize your revenue coming from recommendations while still collecting feedback at each step of a user’s lifecycle.”
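If you build this segmentation yourself rather than in a marketing automation platform, one common technique is deterministic hash bucketing: hash the user ID together with the purchase number and show the survey only when the hash lands in the lowest 10 of 100 buckets. Because the purchase number is part of the hash, each milestone selects a different, effectively independent slice of users, and the same user always gets the same answer for the same milestone. This is a hedged Python sketch; `in_feedback_bucket` and the salt value are hypothetical names.

```python
import hashlib


def in_feedback_bucket(
    user_id: str,
    purchase_number: int,
    percent: int = 10,
    salt: str = "feedback-survey-v1",  # hypothetical; change it to reshuffle buckets
) -> bool:
    """Return True if this user should see the survey after this purchase.

    Hashing the purchase number together with the user ID means each
    milestone (1st, 2nd, 3rd purchase, ...) selects a different slice of
    users, while the same user always gets the same answer for a milestone.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    key = f"{salt}:{purchase_number}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return bucket < percent
```

Users outside the bucket can be shown the product recommendation instead, so nobody sees two pop-ups after the same purchase. Changing the salt reshuffles every bucket, which can be handy when you launch a new survey.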

8. Test your survey

Make sure you test every part of your app—the in-app survey is no exception.

“The best way to test your in-app survey is to run a normal usability test on your app,” Jarrett explains. “But include the survey in the app exactly as if you’d launched it to your planned sample. As you watch the participant use the app, observe what they are doing and then, of course, pay even more attention to what happens when they see that first survey question.”

If they dismiss it straight away, you’ve learned that your app is more important to them than the distraction of the survey. You’ve also learned that you need to invest more work in crafting the first question so that it’s one they really want to answer.

Jarrett says that writing survey questions is a skill that’s rather like learning to play the guitar: you can get some sort of sound out of it as a complete beginner, but making the sorts of sounds people want to hear takes a lot of practice. The same applies to survey questions: anyone can write a beginner question, but writing good survey questions takes effort and lots of testing.

9. Iterate and refine

Another advantage of running short surveys with small samples is that you can constantly improve and iterate.

“If people didn’t like the response options when you tested them, just scrap them and change them to ones that you think will work better,” Jarrett recommends. “Then test again. Your idea might be an improvement, but it might have other problems, and there may be yet another better idea. Iterate and refine.”

Ornelas agrees that you should evaluate your survey frequently.

“Before the latest survey went live, we iterated on the content, the flow, the questions, and the overall tone,” she recalls. “The survey was also a touchpoint of our product, so it needed to correspond to the tone, ease of use, and branding.”

10. Ensure your survey is accessible

Apply the same accessibility best practices you follow to make your app inclusive to your survey as well, and make sure it complies with the Web Content Accessibility Guidelines (WCAG).

Jarrett suggests asking yourself if your survey is:

- Perceivable: Does it have decent color contrast so people with low vision can read it easily, and does it work with a screen reader?

- Operable: Can someone use it with a keyboard or by dictating using a voice assistant?

- Understandable: Does it make sense to anyone including someone who might be stressed or who doesn’t read very well?

- Robust: Does it work properly across a wide range of devices including old discontinued ones and assistive technology?
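For the color contrast question, WCAG 2.x defines a contrast ratio between two colors based on their relative luminance, and Level AA asks for at least 4.5:1 for normal-size text. Here is a minimal Python sketch of that calculation for colors written as `#RRGGBB`; the function names are our own, not from any accessibility library.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color written as '#RRGGBB'."""
    digits = hex_color.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected a color like '#1A2B3C'")
    r, g, b = (int(digits[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1:1 (identical) to 21:1 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def meets_aa_normal_text(foreground: str, background: str) -> bool:
    """WCAG Level AA asks for at least 4.5:1 for normal-size text."""
    return contrast_ratio(foreground, background) >= 4.5
```

With these formulas, gray `#767676` on white comes out just above 4.5:1 while `#777777` falls just below it, which shows how little room there is near the threshold.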

Crucially, Jarrett also recommends testing your in-app survey with users who have disabilities.

“It’s definitely possible for something to be okay from the point of view of the guidelines, but still not be appropriate for someone with a disability or who happens not to fit the stereotype of a young, white, male,” she says. “For example, I’ve seen apps that had a selection of avatars—but none of them were suitable for an older person, let alone someone who uses a wheelchair.”

11. Analyze the survey results and calculate your margin of error

To analyze the data you gathered from your in-app survey, Isaacson recommends the Top Two Box method: add up the share of respondents who picked the two most positive options. It shows you what percentage of people answered positively versus neutrally or negatively. If you went with a seven-point scale, add the top three boxes together instead.
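Computing a Top Two Box score is simple arithmetic: the percentage of responses that fall in the most positive options. The Python sketch below assumes the response options are listed from most positive to most negative, like the ease scales earlier in this article, and defaults to the top three options on a seven-point scale as Isaacson suggests. The function and constant names are illustrative.

```python
from typing import Optional, Sequence


def top_box_score(
    responses: Sequence[str],
    scale: Sequence[str],
    top_n: Optional[int] = None,
) -> float:
    """Percentage of responses in the most positive `top_n` options.

    Assumes `scale` is ordered from most positive to most negative.
    Defaults to the top three boxes on a seven-point scale, else the top two.
    """
    if not responses:
        raise ValueError("no responses to score")
    unknown = set(responses) - set(scale)
    if unknown:
        raise ValueError(f"responses not on the scale: {sorted(unknown)}")
    if top_n is None:
        top_n = 3 if len(scale) == 7 else 2
    top = set(scale[:top_n])
    return 100.0 * sum(1 for r in responses if r in top) / len(responses)


FIVE_POINT_EASE = ["Very easy", "Easy", "Neutral", "Difficult", "Very difficult"]
```

For instance, the responses `["Very easy", "Easy", "Easy", "Neutral", "Difficult"]` on `FIVE_POINT_EASE` give a Top Two Box score of 60.0.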

Isaacson warns against getting too concerned when the Top Two Box score drops by a few points, or celebrating when it rises by a few. Instead, calculate your margin of error using a sample size calculator.

Here are Isaacson’s steps to conduct a proper calculation:

- Enter the number of people who used your app within a specific time frame. That’s the population.

- Enter how many people answered your survey within that same period. That’s the sample.

- Then look at the margin of error.

“A professionally executed survey will typically have a five-point margin of error,” Isaacson says. “That means the results could actually be five points up or down from the reported results.”
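Sample size calculators typically use the standard margin-of-error formula for a proportion, MoE = z · sqrt(p(1 − p) / n) · sqrt((N − n) / (N − 1)), where N is the population and n the sample from the steps above; the second square root is the finite population correction. This Python sketch assumes the common calculator defaults of 95 percent confidence (z = 1.96) and p = 0.5, the most conservative choice; your calculator may let you change both.

```python
import math


def margin_of_error(population: int, sample: int, z: float = 1.96, p: float = 0.5) -> float:
    """Margin of error in percentage points for a surveyed proportion.

    population: people who used the app in the time frame (N)
    sample: people who answered the survey in that same period (n)
    z = 1.96 corresponds to 95% confidence; p = 0.5 is the worst case.
    """
    if not 0 < sample <= population:
        raise ValueError("need 0 < sample <= population")
    if sample == population:
        return 0.0  # everyone answered, so there is no sampling error
    standard_error = math.sqrt(p * (1 - p) / sample)
    # Finite population correction: shrinks the error as n approaches N.
    fpc = math.sqrt((population - sample) / (population - 1))
    return 100 * z * standard_error * fpc
```

Under these assumptions, 100 responses from 10,000 users gives a margin of roughly 9.8 points, while about 370 responses from the same population brings it close to the five points Isaacson mentions.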

And don’t forget to analyze the open-ended data as well.

“People freely telling you what they liked and didn’t like about your app is research gold. Use it. That data can alert you to unmet needs or minor problems that are on their way to being much more significant,” Isaacson says.

Isaacson suggests analyzing the data manually by putting it into a spreadsheet and tagging each entry with keywords to help find common themes across responses. If you’re looking at more data than you can handle manually, you can use an AI option instead.

“But be warned that AI analysis tools are notoriously inaccurate,” Isaacson cautions. “Computers can't detect the nuance we humans pick up on instinctively.”
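Once you have read a batch of responses and settled on themes, counting how often each theme appears can be scripted. The Python sketch below is plain keyword matching, not AI, and the theme names and keywords in `THEMES` are hypothetical placeholders to replace with ones drawn from your own data. It matches whole words only, so “lag” will not match “lagging”, and like any automated approach it misses nuance, so treat its counts as a starting point for the manual review Isaacson recommends.

```python
import re
from collections import Counter
from typing import Dict, Iterable, List, Set

# Hypothetical themes and keywords: replace them with ones drawn from your own
# first pass through the responses.
THEMES: Dict[str, List[str]] = {
    "performance": ["slow", "lag", "loading", "crash"],
    "pricing": ["price", "expensive", "cost"],
    "usability": ["confusing", "hard to find", "unclear"],
}


def tag_response(text: str, themes: Dict[str, List[str]] = THEMES) -> Set[str]:
    """Return the themes whose keywords appear as whole words in `text`."""
    lowered = text.lower()
    return {
        theme
        for theme, keywords in themes.items()
        if any(re.search(rf"\b{re.escape(k)}\b", lowered) for k in keywords)
    }


def count_themes(responses: Iterable[str], themes: Dict[str, List[str]] = THEMES) -> Counter:
    """Count how many responses mention each theme (at most once per response)."""
    counts: Counter = Counter()
    for response in responses:
        counts.update(tag_response(response, themes))
    return counts
```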

12. Conduct follow-up interviews

Another way to combine qualitative and quantitative data to get at the “why” is by carrying out follow-up interviews with a select handful of users.

UX research expert Laura Herman suggests adding a question at the end of the survey, asking if the respondent would be willing to participate in a short moderated user research session.

“If they are interested, let them provide their contact information for easy scheduling,” Herman advises. “Just three to five interviews can completely transform your understanding of your users’ needs and behavior!”

13. Consider the pros and cons of attitudinal research

A survey is a terrific example of attitudinal research: It provides insight into what users think or say. However, what users say they do is often quite different from what users actually do.

“If asked about our exercise habits, for instance, most people will say they exercise regularly,” Herman explains. “But if a researcher were to observe us, our exercise patterns might be irregular, sporadic, or even nonexistent—particularly during a pandemic!”

Herman recommends thinking about how your research questions can best be answered: Is an attitudinal approach appropriate, or would you benefit from behavioral research, which tells us about what users do?

“If you’re curious about users’ satisfaction with, perceptions of, or associations with your product, then a survey can be a perfect fit,” Herman advises. “If you’re looking for underlying user needs and usage patterns, on the other hand, a behavioral approach would be better.”

Improve the user feedback loop with in-app surveys

In-app surveys can be an excellent tool to collect targeted user feedback about your app. As your users are already engaging with your product and you have their attention, it can be a good time to directly find out what they like and don’t like about your app or why they perform certain interactions.

In-app surveys are versatile: they can be tailored to a specific part of your app or triggered by your users’ behavior, giving you insights that can help you improve your app.

The trick is to ask the right questions at the right time, and design the survey in a way that doesn’t disrupt the user experience so your users actually want to fill it out. Follow the same usability and accessibility principles you keep in mind for the rest of your app, and don’t use an in-app survey in isolation. Complement it with moderated user research to get the best out of the data and really understand your users. When done right, capturing feedback within the context of your app while customers are using it can be invaluable.
